This section lets you manage the clustering service, which provides high availability for the load balancing services through two collaborating nodes in active-passive mode.

A cluster is essentially a group of two devices working together to keep the backend services always available and to avoid service downtime from the client's point of view. Usually, there are master and slave roles in active-passive mode: the master is the node that currently manages the service traffic to the backends and accepts the connections from the clients, while the backup node receives all the configuration in real time so that it is ready to launch the services if it detects that the master node is not responding properly.

When the load balancing services are switched from one node to the other, the backup node takes over all the current connections and service statuses by itself, so that clients do not experience any interruption in the service.

Configure Cluster Service

This is the main page for configuring the cluster. The clustering is composed of several services, including:

Synchronization. This service automatically synchronizes the configuration made on the master node to the slave node, so every configuration change is replicated to the slave node, which is then ready to take control whenever required. This service uses inotify and rsync over SSH to synchronize the configuration files in real time.

Heartbeat. This service checks the health status of the cluster nodes among themselves in order to quickly detect when a node is not performing correctly. It relies on the VRRP protocol over multicast, which is designed for lightweight, real-time communication. Zevenet 5 uses keepalived to provide this service.

Connection Tracking. This service uses conntrack to replicate the connections and their states in real time, so that the backup node can resume all the connections during a failover and neither the clients nor the backends detect any connection disruption.

Command Replication. This service sends the configuration applied on the master node to the slave and activates it there, but in a passive way, so that during a failover the slave takes control, launches all the networking and farms, and resumes the connections as soon as possible. This service is managed by zclustermanager over SSH.
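As an illustration of the Synchronization service, the following is a minimal Python sketch that only builds the kind of rsync-over-SSH command that could be run each time inotify reports a change. The function name, the configuration path and the IP address are hypothetical, and the exact options used by the product are not documented here.

```python
from typing import List


def build_sync_command(config_dir: str, remote_ip: str, remote_user: str = "root") -> List[str]:
    """Return an rsync-over-SSH argument list that mirrors config_dir to the remote node."""
    # A trailing slash makes rsync copy the directory contents, not the directory itself.
    source = config_dir.rstrip("/") + "/"
    destination = f"{remote_user}@{remote_ip}:{source}"
    # -a keeps permissions and timestamps, -z compresses, --delete removes
    # files on the slave that no longer exist on the master.
    return ["rsync", "-az", "--delete", "-e", "ssh", source, destination]


# Hypothetical path and address, for illustration only.
command = build_sync_command("/path/to/config", "192.0.2.11")
print(" ".join(command))
```

The sketch returns the argument list instead of executing it, so it can be inspected safely before being passed to a process runner.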

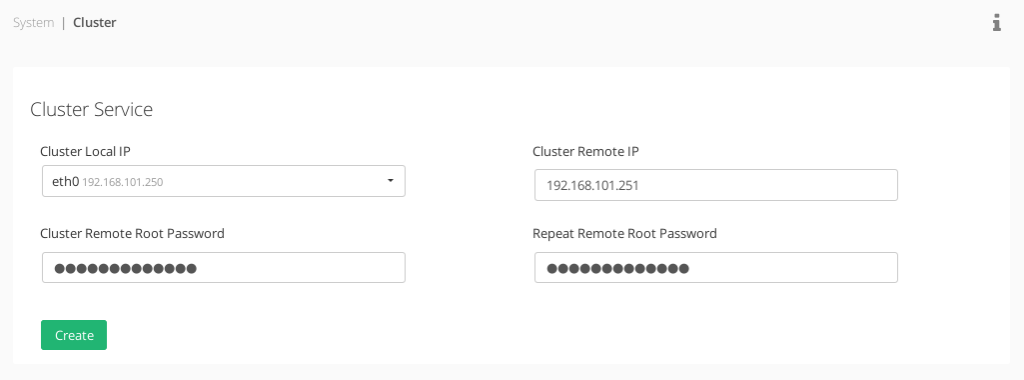

The cluster configuration should be initiated from the future master node and requires the following data to be created:

Cluster Local IP. Drop-down list of all the available network interfaces on which a cluster service can be created. Virtual interfaces are not allowed.

Cluster Remote IP. IP address of the remote node that will act as the future slave node.

Cluster Remote Root Password. Password of the root user of the remote (future slave) node.

Repeat Remote Root Password. Repeat the password to ensure that it is correct.

After entering all the requested information, click the Create button. A confirmation is shown that the cluster services have been set up properly, provided that there is communication between the nodes and no issues have occurred.
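The checks implied by the form fields above could be sketched in Python as follows. This is not the product's own validation: the function name and the error messages are hypothetical, and the sketch assumes that virtual interfaces are named with a colon suffix such as eth0:1.

```python
import ipaddress
from typing import List


def validate_cluster_form(local_interface: str, remote_ip: str,
                          password: str, repeat_password: str) -> List[str]:
    """Return a list of problems found in the cluster creation data (empty if none)."""
    errors = []
    # Assumption: a colon in the name marks a virtual interface, e.g. "eth0:1".
    if ":" in local_interface:
        errors.append("virtual interfaces are not allowed")
    try:
        ipaddress.ip_address(remote_ip)
    except ValueError:
        errors.append("remote IP is not a valid IP address")
    if not password:
        errors.append("remote root password is required")
    elif password != repeat_password:
        errors.append("passwords do not match")
    return errors


# Hypothetical values, for illustration only.
print(validate_cluster_form("eth0", "192.0.2.11", "s3cret", "s3cret"))
print(validate_cluster_form("eth0:1", "not-an-ip", "s3cret", "secret"))
```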

Show Cluster Service

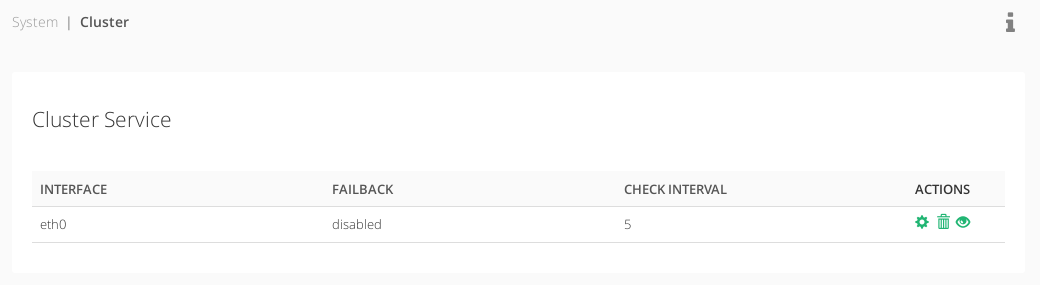

If the cluster service is already configured and active, this page shows the following information about the services and backends, together with the actions to manage the cluster.

INTERFACE. Network interface on which the cluster services have been configured.

FAILBACK. Sets whether, after a failover, the load balancing services should be returned to the master when it is available again, or whether the current node should remain as the new master. This option is useful when the slave node has fewer resources allocated than the master, so that the latter should be the preferred master for the services.

CHECK INTERVAL. Time interval that the heartbeat service uses to check the status between the nodes.

ACTIONS. The actions available to manage the cluster are the following.

- Configure. Change some available cluster settings.

- Unset. Disable the cluster between the given nodes.

- Show Nodes. Show the nodes table and the status of each node.

- Reload. Refresh the nodes table and the status of each node.
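The effect of the FAILBACK option can be illustrated with a small Python sketch that evaluates one heartbeat result per check interval. It is a simplified model under stated assumptions, not the product's implementation: the function name and the node labels "preferred" (the original master) and "other" are hypothetical.

```python
def next_active(active: str, preferred_alive: bool, other_alive: bool, failback: bool) -> str:
    """Return which node ("preferred" or "other") should run the services next."""
    if active == "preferred":
        # Fail over only when the preferred master stops responding.
        if not preferred_alive and other_alive:
            return "other"
        return "preferred"
    # The other node is currently active: return the services to the
    # preferred master if failback is enabled or the other node has failed.
    if preferred_alive and (failback or not other_alive):
        return "preferred"
    return "other"


# One (preferred_alive, other_alive) result per check interval:
# the preferred master fails at the second check and recovers at the third.
checks = [(True, True), (False, True), (True, True)]
for failback in (True, False):
    active = "preferred"
    history = []
    for preferred_alive, other_alive in checks:
        active = next_active(active, preferred_alive, other_alive, failback)
        history.append(active)
    print(failback, history)
```

With failback enabled the services return to the preferred master once it recovers; with failback disabled they stay on the node that took over.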

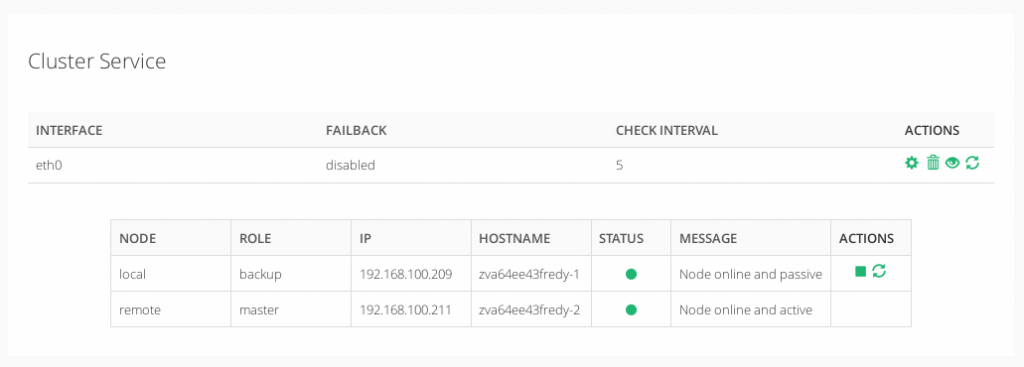

The Show Nodes action shows a table with:

NODE. Shows whether each node of the cluster is local or remote. This depends on which node you are connected to through the web GUI: local is the node you are currently connected to, and remote is the other node.

ROLE. Shows whether each node of the cluster is master, backup (also known as slave), or maintenance if the node is temporarily disabled. This depends on the role that the node has in the cluster.

IP. IP address of every node that makes up the cluster.

HOSTNAME. Host name of every node that makes up the cluster.

STATUS. The node status can be Red if there is any failure, Grey if the node is unreachable or not configured, Orange if it is in maintenance mode, or Green if everything is working correctly.

MESSAGE. The message reported by the node; this is a debug message for every node in the cluster.

ACTIONS. The actions available for every node are the following.

- Maintenance. Put a cluster node in maintenance mode to disable it temporarily, in order to perform maintenance tasks and avoid a failover.

- Start. Put the cluster node online again after maintenance tasks.

- Reload. Refresh the cluster node status.
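The STATUS colors described above can be summarized as a small Python mapping. This is only a sketch: the function name and its parameters are hypothetical, and the order in which the conditions are checked is an assumption, since the text does not say which color wins when several conditions apply.

```python
def node_status_color(reachable: bool, configured: bool,
                      in_maintenance: bool, has_failure: bool) -> str:
    """Map a node's condition to the color shown in the STATUS column."""
    # Assumed priority: grey, then orange, then red, otherwise green.
    if not reachable or not configured:
        return "grey"
    if in_maintenance:
        return "orange"
    if has_failure:
        return "red"
    return "green"


# A healthy node, and a node that has been put in maintenance mode.
print(node_status_color(True, True, False, False))
print(node_status_color(True, True, True, False))
```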

The top of the web panel shows a summarized status of the local cluster node. For example, green status and master role:

Another example, orange status and maintenance role: